Paper-based tests cannot assess the new disciplinary knowledge, skills and competences that digital technology makes possible. This helps to explain why Luckin et al (2012 p.64) observed that “Much existing teaching practice may well not benefit greatly from new technologies”. Referring to paper-based assessment, Jencks (1972, cited in Gardner 1999 p.97) noted that “there is ample evidence that formal testing alone is an indifferent predictor for success once a student has left school”; this lack of authenticity has been exacerbated by the changes that digital technology has brought about in society. Digital technology-enabled testing is being adopted in many sectors, including higher education (HE) and further education (FE), but has had very little impact on high stakes assessment in schools in the UK (though it is being implemented in the USA, Finland and Australia).

Twining et al (2006 p.20) discussed problems with current (paper-based) assessment models in their report for Becta on the Harnessing Technology Strategy:

There is strong support for the view that we are using the wrong ‘measures’ when it comes to evaluating the benefits of ICT in education (for example Hedges et al 2000; Heppell 1999; Lewis 2001; Loveless 2002; McFarlane 2003; Ridgway and McCusker 2004). Heppell has long argued that the ways in which we assess learning that has been mediated by ICT is problematic, and has illustrated the problem using the following analogy:

‘Imagine a nation of horse riders with a clearly defined set of riding capabilities. In one short decade the motor car is invented and within that same decade many children become highly competent drivers extending the boundaries of their travel as well as developing entirely new leisure pursuits (like stock-car racing and hot rodding). At the end of the decade government ministers want to assess the true impact of automobiles on the nation’s capability. They do it by putting everyone back on the horses and checking their dressage, jumping and trotting as before. Of course, we can all see that it is ridiculous.’ (Heppell 1994 p154)

It seems clear that current assessment practices do not match well with the learning that ICT facilitates (for example Ridgway and McCusker 2004; Venezky 2001) and there is strong support for the need to change how we assess learning in order to rectify this problem (for example Kaiser 1974; Lemke and Coughlin 1998; Lewin et al 2000; McFarlane et al 2000; Barton 2001; ICTRN 2001; Trilling and Hood 2001).

Because assessment is linked to accountability in schools, it is a key driver of schools’ behaviours and practices. Evidence from the 22 Vital Case Studies in English schools (Twining 2014a) and the 13 Snapshot Studies in Australian schools (Twining 2014b) indicates that current (paper-based) high stakes assessment inhibits the use of digital technology in schools. Schools that were making extensive use of digital technology reported a mismatch between what they were doing and what their students would be assessed on. As a result, they reduced their use of digital technology and returned to traditional forms of teaching about three months before high stakes national assessments. At the most basic level, this was to allow their students to build up the handwriting stamina needed to write for up to six hours per day during the exam period. More fundamentally, they needed to teach their pupils how to write exam answers on paper, as this process differs from word-processing answers (even when the spell checker and grammar checker are disabled). This creates a disincentive for schools to invest in digital technology, as it will be underused for a substantial proportion of the time.

A great deal of work on digital technology-enabled assessment in schools took place in England prior to 2010. However, there has been little growth in digital technology-enabled testing in schools since then. This reflects changes in the political and regulatory climate that increased the risk to exam boards of continuing to work in this area. Nevertheless, with active support from the DfE and Ofqual, exam boards would be keen to progress on-screen testing for General Qualifications (GQs) in schools.

Preliminary discussions exploring the issues surrounding the development of technology-enabled summative testing in secondary schools have already taken place with representatives from the following stakeholder groups: the Department for Education (DfE); Ofqual; exam boards (e.g. AQA, Pearson); DRS (a company that handles over 9 million GCSE scripts per year on behalf of exam boards); the British Educational Suppliers Association (BESA); educational suppliers; providers of computer-based assessment systems (e.g. BTL, Pearson); and organisations in other countries that are exploring this area (e.g. the German Institute for International Educational Research, which developed and implemented computerised assessments for PISA).

We have been liaising with Andrew Fluck, who developed the Tasmanian eExam system, and have prototypes of demonstration exams. We have also obtained copies of Digabi (the system being developed in Finland). In both cases, computers are booted from a flash drive that runs a version of Linux and blocks access to the hard drive, the Internet and other ports. This reduces the risk of students cheating and ensures that they all have access to ‘the same’ environment and software on their machines. Both of these flash-drive-based systems need further development, particularly in relation to demonstration examinations and the logistics of deploying them at scale in UK schools. BTL and Pearson already have expertise and systems that could address many of the logistical and pedagogical challenges associated with digital technology-enabled testing in schools.

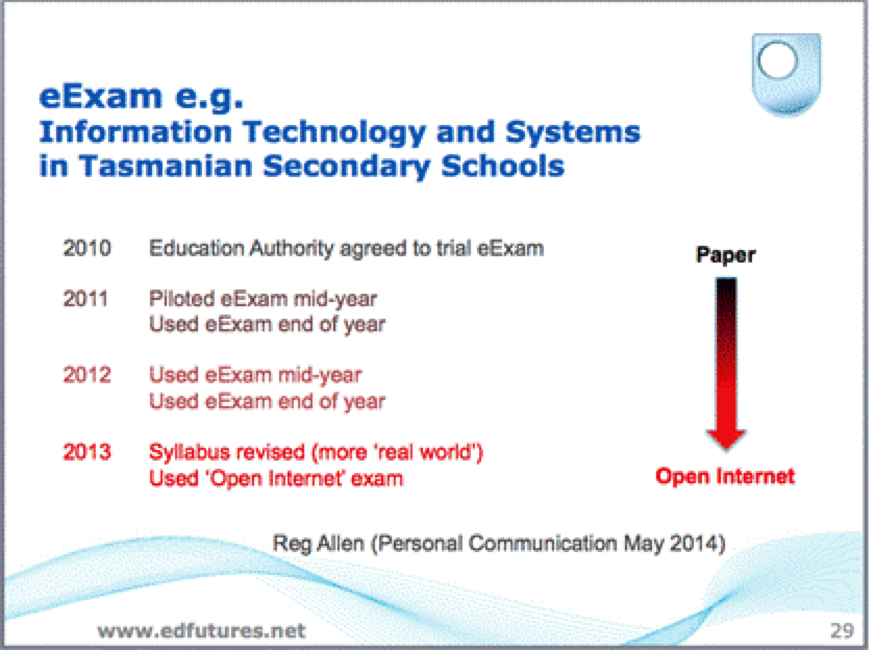

One of the problems with previous attempts to implement digital technology-enabled testing in schools is that they tried to address too many issues simultaneously. The eExam system includes a successful change management strategy that allows schools to focus initially on the technical and logistical challenges, before having to deal with the pedagogical issues and concerns about the reliability and validity of new forms of assessment. Figure 5 provides an overview of the stages that the Tasmanian Department of Education went through when it trialled the eExam system in high stakes exams (specifically Information Technology and Systems) in its secondary schools. The eExam approach builds on the use of students’ own technology, reflecting the growth of Bring Your Own (BYO) strategies in schools. Complementing this with a paper-replication strategy, in which the same exam can be taken either on paper or on-screen, goes a considerable way towards addressing concerns about schools’ technical infrastructure and the risks associated with technology failure. Paper replication is intended as a transition phase: it simplifies the move from paper-based testing to digital technology-enabled testing that exploits the full potential of the technology.

Figure 5 Overview of the development of the Tasmanian pilot of Andrew Fluck’s eExam system (Twining 2014c)

Evidence from the Tasmanian trials and from the Your Own Technology Survey in England (see http://www.yots.org.uk) suggests that a significant proportion of secondary school students already have access to mobile devices that they could use in school. This reduces the burden on schools to provide computers for digital technology-enabled exams. However, schools would still need to support students who do not have their own devices, as well as those who choose to take the paper-based exam; the paper-replication approach makes the latter possible for any student who prefers to work on paper. Indeed, one of the fail-safe elements of the approach is that if a machine develops a technical problem, the student can continue the exam on paper (and the answers they have already provided will be saved on their flash drive). Where spelling and grammar are being assessed, the spelling and grammar checkers on the computers can be disabled.

As already noted, paper-replication is not the intended end-point, and there is a danger that the move to effective digital technology-enabled assessment might stall at this point. This risk needs to be balanced against the practical challenges of moving directly from paper-based to full-blown digital technology-enabled assessment in schools.